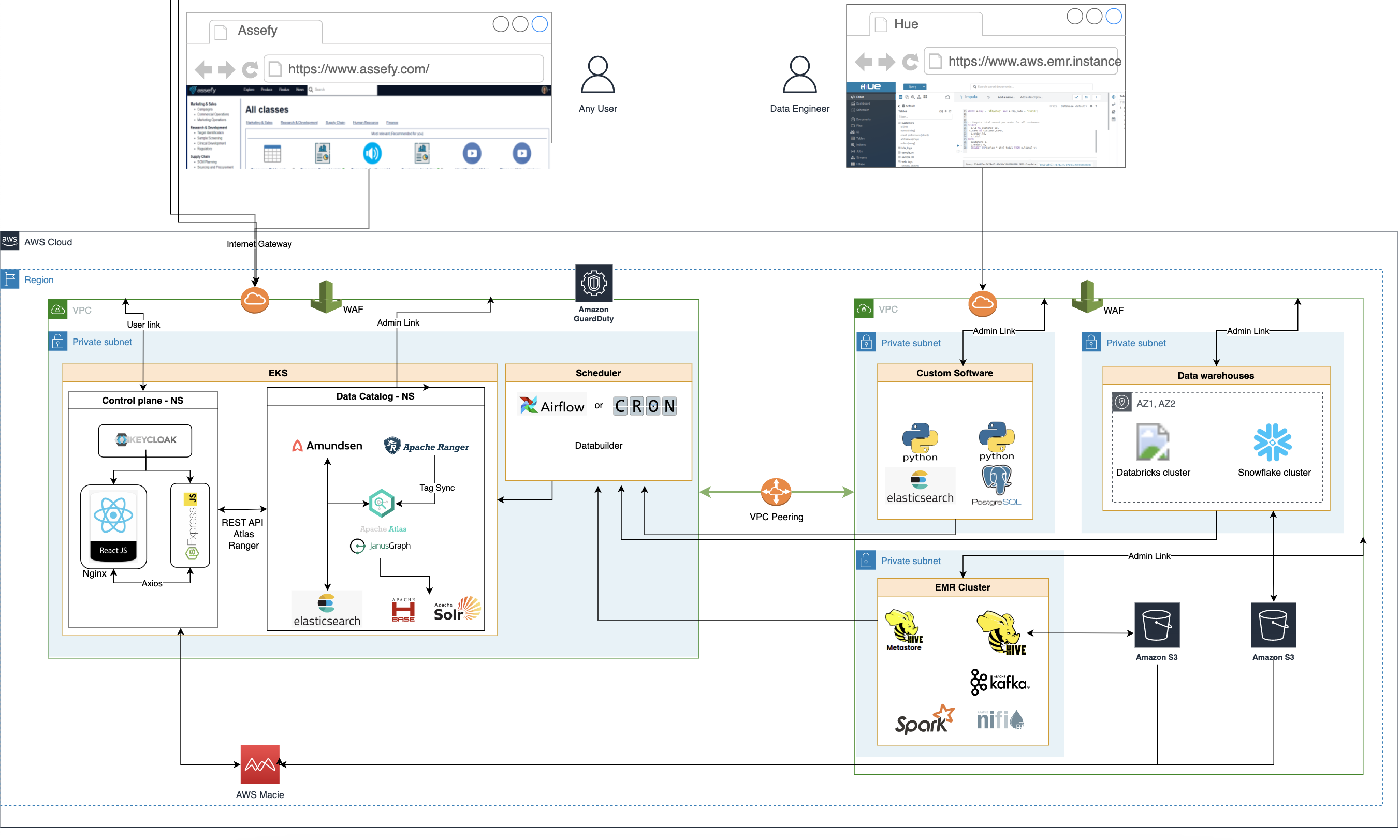

Architecture design of a Data Catalog system in AWS. The objective of the project is to ingest metadata from all kinds of data sources. This includes relational databases, data warehouses, any other kind of database, data files, and media such as video, audio, or images.

Given the state of the art at the time the solution was designed, the best option was to use the Amundsen data catalog, replacing its default Neo4j backend with Apache Atlas. Amundsen and Apache Atlas are both FOSS (free and open-source software). Both have sizable communities behind them and can be combined to provide a robust and flexible data catalog solution.

Amundsen has features that Atlas does not include. For example, it has ranked search, and it provides additional dataset fields that make the data catalog more of a social tool. Atlas is also a data catalog in its own right, but it is designed for more technical users. However, the Apache Atlas knowledge graph is more complete than Amundsen's default Neo4j knowledge graph. For this reason, using Apache Atlas as the backend for Amundsen's knowledge graph combines the knowledge graph modelling of Apache Atlas with the end-user orientation of Amundsen, which includes a search experience that is much more useful for a large team.

Data Catalog as a solution to any client use case

The value of the proposed architecture lies in the fact that it is relatively easy to deploy our solution to whichever cloud a client is already using. This is possible by deploying an already designed data catalog solution based on Amundsen and then fine-tuning it to ingest that client's specific metadata with the help of the databuilder Python library.
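To make the per-client fine-tuning step more concrete, here is a minimal sketch of the kind of record an ingestion job might produce for one table and how it could be shaped into an Apache Atlas v2 entity payload. It does not use the databuilder API, which in practice handles extraction and publishing. The `TableMetadata` class, the `to_atlas_entity` helper, the `Table` type name, and the `db://cluster.schema/table` key format are all assumptions made for illustration.

```python
import json
from dataclasses import dataclass


@dataclass
class TableMetadata:
    """Hypothetical description of one table harvested from a client source."""
    database: str      # source system type, e.g. "postgres"
    cluster: str       # deployment or environment name
    schema: str
    name: str
    description: str = ""

    def key(self) -> str:
        # Assumed key layout (db://cluster.schema/table); adjust to the
        # convention your catalog deployment actually uses.
        return f"{self.database}://{self.cluster}.{self.schema}/{self.name}"


def to_atlas_entity(table: TableMetadata, type_name: str = "Table") -> dict:
    """Shape a record as an Atlas v2 entity payload.

    `type_name` is an assumption; use the entity type registered in your Atlas.
    """
    return {
        "entity": {
            "typeName": type_name,
            "attributes": {
                "qualifiedName": table.key(),
                "name": table.name,
                "description": table.description,
            },
        }
    }


if __name__ == "__main__":
    orders = TableMetadata("postgres", "prod", "public", "orders", "Customer orders")
    # A payload like this could be sent to Atlas' entity endpoint
    # (POST /api/atlas/v2/entity); no network call is made in this sketch.
    print(json.dumps(to_atlas_entity(orders), indent=2))
```

Keeping the client-specific part down to a small record type like this is one way to reuse the same deployment across clients: only the extraction logic that fills the records would change.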

The value also lies in the fact that we provide a React web UI that is even more user friendly than the Amundsen one. This lets us add more functionality and features to classify, share, rate, and search the metadata, while preserving access to the control planes of Apache Atlas or Apache Ranger for the more technical users on the team. Using Apache Atlas enables us to expand the data catalog fields to support all the new functionality that the React application offers.

For those interested in how Apache Atlas supports Amundsen as a backend, I have created a Google Sheets document that lists each of the types that Atlas creates for Amundsen on top of the default ones: Amundsen Types in Apache Atlas
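
As a complement to the spreadsheet, the following sketch shows one way to derive such a list yourself: take the type definitions that Atlas returns from its v2 typedefs endpoint and keep only the names that are not in a baseline of default types. The helper names, the sample payload, and the baseline set are hypothetical. The grouping keys (`entityDefs`, `classificationDefs`, and so on) follow the structure of the Atlas typedefs response as I understand it.

```python
# Groups present in an Atlas typedefs response (GET /api/atlas/v2/types/typedefs).
TYPEDEF_GROUPS = (
    "enumDefs",
    "structDefs",
    "classificationDefs",
    "entityDefs",
    "relationshipDefs",
)


def typedefs_url(base_url: str) -> str:
    """Build the typedefs endpoint URL for an Atlas server."""
    return base_url.rstrip("/") + "/api/atlas/v2/types/typedefs"


def added_type_names(typedefs: dict, baseline: set) -> dict:
    """Return, per group, the sorted type names that are not in `baseline`."""
    added = {}
    for group in TYPEDEF_GROUPS:
        names = sorted(
            d["name"] for d in typedefs.get(group, []) if d["name"] not in baseline
        )
        if names:
            added[group] = names
    return added


if __name__ == "__main__":
    # Illustrative payload only; a real response contains many more fields.
    sample = {
        "entityDefs": [{"name": "hive_table"}, {"name": "Table"}, {"name": "Column"}],
        "relationshipDefs": [{"name": "Table_Columns"}],
    }
    # Baseline of default type names, e.g. captured from a fresh Atlas install.
    defaults = {"hive_table"}
    print(typedefs_url("http://localhost:21000"))
    print(added_type_names(sample, defaults))
```

Capturing the baseline from a fresh Atlas installation before Amundsen registers its types would make the comparison reflect exactly what was added.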